Join us for the CASA RC Trust Summer School 2025, an engaging and insightful event focusing on Cyber and Societal Security in the AI Era. Hosted at Ruhr-Universität Bochum (Germany) from June 23 to 27, 2025, this summer school provides an excellent opportunity for students and researchers to deepen their understanding of security challenges and solutions in the age of artificial intelligence.

Who Can Participate?

The summer school is open to:

- Master's students

- PhD students

- Postdoctoral researchers

- Advanced bachelor's students close to graduation

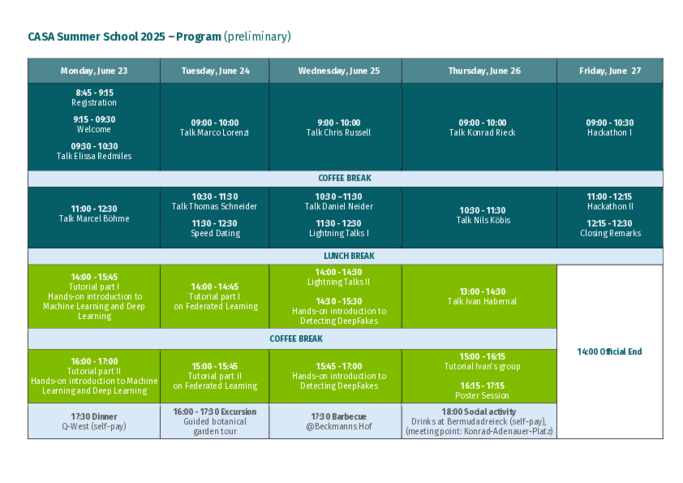

Program Highlights

- Thorough lectures and discussions on cybersecurity, AI-driven threats, and societal security

- Detailed sessions led by renowned researchers in the field

- Interactive segments designed to promote collaboration and knowledge exchange

How to Apply?

Registration is closed.

![CASA Summer School 2024: people at a table](/fileadmin/_processed_/f/b/csm_SummerSchool_Bild5_e29b0252c0.jpg)

Group activity at the Summer School 2023. Copyright: CASA/Meyer

Program

Copyright: private

Marco Lorenzi

(Centre Inria d’Université Côte d’Azur)

This lecture will illustrate the principles of federated learning with a focus on healthcare applications. We will explore the key challenges of the federated learning paradigm. These include learning from heterogeneous and incomplete data across clients, which requires unbiased strategies for effective model aggregation. Additionally, we will discuss the problem of federated unlearning, which arises when a client wishes to remove their contribution from a jointly trained model. We will consider how this can be achieved without compromising the integrity of the model or revealing sensitive information about the client's data. Specifically, we will examine the challenges of identifying and removing the influence of a particular client's data on the global model, and explore potential solutions inspired by differential privacy. Finally, we will analyze available software solutions to these challenges, highlighting the successful implementation of advanced federated analysis systems for multimodal imaging data in oncology and neuroimaging.

Copyright: private

Elissa Redmiles

(Georgetown University)

"Safe(r) Digital Intimacy: Lessons for Internet Governance & Digital Safety"

The creators of sexual content face a constellation of unique online risks. In this talk, I will review findings from over half a decade of research I have conducted in Europe and the US on the use cases, threat models, and protections needed for intimate content and interactions. We will start by discussing what motivates the consensual sharing of intimate content in recreation ("sexting") and in labor (particularly on OnlyFans, a platform focused on the commercial sharing of intimate content). We will then turn to the threat of image-based sexual abuse, a form of sexual violence that encompasses the non-consensual creation and/or sharing of intimate content. We will discuss two forms of image-based sexual abuse. The first is the non-consensual distribution of intimate content that was originally shared consensually. The second is the rising use of AI to create intimate content without people's consent. The talk will conclude with a discussion of how these issues inform broader conversations around internet governance, digital discrimination, and safety-by-design for marginalized and vulnerable groups.

Copyright: private

Thomas Schneider

(Technical University of Darmstadt)

"Private Machine Learning via Multi-Party Computation"

Privacy-preserving machine learning (PPML) protocols make it possible to privately evaluate or even train machine learning models on sensitive data while protecting both the data and the model. In this talk, I will trace the rapidly growing field of PPML from early historical works up to very recent results. Several techniques enable PPML, such as homomorphic encryption, trusted execution environments, differential privacy, and Multi-Party Computation (MPC). Of these, I will place the most emphasis on MPC. The main message and running theme of this presentation will be that PPML is much more than just evaluating or training neural networks under encryption. I will give an overview of a wide variety of research works, with a special focus on those by the ENCRYPTO Group at TU Darmstadt. I will conclude with an outlook on future directions in PPML.

Copyright: Fuchs/ TU Berlin

Konrad Rieck

(Technical University Berlin)

“Attacks on Machine Learning Backends”

The security of machine learning is typically discussed in terms of adversarial robustness and data privacy. Yet beneath every learning-based system lies a complex layer of infrastructure: the machine learning backends. These hardware and software components are critical to inference, ranging from GPU accelerators to mathematical libraries. In this talk, we examine the security of these backends and present two novel attacks. The first implants a backdoor in a hardware accelerator, enabling it to alter model predictions without visible changes. The second introduces Chimera examples—a new class of inputs that produce different outputs from the same model depending on the linear algebra backend. Both attacks reveal a natural connection between adversarial learning and systems security, broadening our notion of machine learning security.

Marcel Böhme

(MPI-SP)

"Benchmarks are our measure of progress. Or are they?"

How do we know how well our tool solves a problem, such as bug finding, compared to other state-of-the-art tools? We run a benchmark. We choose a few representative instances of the problem, define a reasonable measure of success, and identify and mitigate various threats to validity. Finally, we implement (or reuse) a benchmarking framework and compare the results for our tool with those for the state of the art. For many important software engineering problems, we have seen renewed interest and serious progress whenever a (substantially better) benchmark became available. Benchmarks are our measure of progress. Without them, we have no empirical support for our claims of effectiveness. Yet, time and again, we see practitioners dismiss entire technologies as "paper-ware", far from solving the problem they set out to solve. In this keynote, I will discuss our recent efforts to systematically study the degree to which our evaluation methodologies allow us to measure the capabilities we aim to measure. We shed new light on a long-standing dispute about code coverage as a measure of testing effectiveness. We also explore the impact of the specific benchmark configuration on the evaluation outcome. Finally, we call into question the actual versus measured progress of an entire field, machine learning for vulnerability detection (ML4VD), just as it gains substantial momentum and interest.

Copyright: University of Oxford

Chris Russell

(University of Oxford)

"AGI: Artificial Good-enough Intelligence?"

Much of the recent work in the governance of AI treats LLMs as a precursor to a superhuman general intelligence and focuses on mitigating the harms that such intelligences could cause. Instead, we look at LLMs as they are now and ask: Where can they be used in their current form? What harms can arise from their use? And what can we do to improve their behaviour?

Copyright: RUB, Marquard

Ivan Habernal

(Research Center Trustworthy Data Science and Security)

"A concise introduction to privacy-preserving natural language processing"

In this lecture and tutorial, we will address some fundamental techniques for protecting privacy in natural language processing. We will start with some philosophical thoughts on what privacy and secrets are. We will then continue with ad-hoc techniques for text anonymization. After that, we will look into a formal probabilistic treatment based on the framework of differential privacy for protecting the training data of neural networks. We will finish with some open fundamental questions, so you can spend the rest of the day wrapping your head around them. In the hands-on tutorial, we will scratch the surface with some tools for privacy-preserving NLP, mostly in the realm of Python and neural networks.

Copyright: RC Trust

Nils Koebis

(Research Center Trustworthy Data Science and Security)

"How Artificial Intelligence influences Human Ethical Behavior"

As machines powered by artificial intelligence (AI) influence human behavior in ways that are both like and unlike the ways humans influence each other, concerns are emerging about the corrupting power of AI agents.

To address this concern, I will present in this talk a short overview of the available evidence from behavioral science, human-computer interaction, and AI research on how AI influences human ethical behavior. I will also discuss how psychological science can help to mitigate these new risks.

As a theoretical contribution, I propose a conceptual framework that outlines four main social roles through which machines can influence ethical behavior. These are role models, advisors, partners, and delegates [1].

As empirical contributions, I present recently published and unpublished experimental work testing the roles of advisors and delegates. These results are based on more than 9,000 participants. They show that (a) when AI agents act as moral advisors, their corrupting power matches that of humans [2], (b) when they assist people in lie detection, they massively increase accusation rates [3], and (c) when AI agents become delegates, people willingly delegate unethical behavior to them, although the interface through which people delegate also plays a big role [4].

As a policy contribution, the talk will conclude by summarizing behavioral insights on which commonly proposed interventions (e.g., those in the most recent proposal for the EU AI Act) successfully mitigate the risks of AI corrupting ethical behavior [5]. It will also sketch an agenda for interdisciplinary behavioral AI safety research.

[1] Köbis, N., Bonnefon, J. F., & Rahwan, I. (2021). Bad machines corrupt good morals. Nature Human Behaviour, 5(6), 679-685.

[2] *Leib, M., *Köbis, N., Rilke, R. M., Hagens, M., & Irlenbusch, B. (2024). Corrupted by algorithms? How AI-generated and human-written advice shape (dis)honesty. The Economic Journal, 134(658), 766-784.

[3] von Schenk, A., Klockmann, V., Bonnefon, J. F., Rahwan, I., & Köbis, N. (2024). Lie detection algorithms disrupt the social dynamics of accusation behavior. iScience, 27(7).

[4] *Köbis, *Rahwan, Ajaj, Bersch, Bonnefon, & Rahwan (working paper). Experimental evidence that delegating to intelligent machines can increase dishonest behaviour.

[5] Köbis, N., Starke, C., & Rahwan, I. (2022). The promise and perils of using artificial intelligence to fight corruption. Nature Machine Intelligence, 4(5), 418-424.

Copyright: RC Trust

Daniel Neider

(Research Center Trustworthy Data Science and Security)

"A gentle Introduction to Neural Network Verification"

Artificial Intelligence has become ubiquitous in modern life. This "Cambrian explosion" of intelligent systems has been made possible by extraordinary advances in machine learning, especially in training deep neural networks and their ingenious architectures. However, like traditional hardware and software, neural networks often have defects, which are notoriously difficult to detect and correct. Consequently, deploying them in safety-critical settings remains a substantial challenge. Motivated by the success of formal methods in establishing the reliability of safety-critical hardware and software, numerous formal verification techniques for deep neural networks have emerged recently. These techniques aim to ensure the safety of AI systems by detecting potential bugs, including those that may arise from unseen data not present in the training or test datasets, and proving the absence of critical errors. As a guide through the vibrant and rapidly evolving field of neural network verification, this talk will give an overview of the fundamentals and core concepts of the field, discuss prototypical examples of various existing verification approaches, and showcase state-of-the-art tools.

Location

The Summer School 2025 takes place at two locations, both on the campus of Ruhr University Bochum.

On days 1 to 4 (June 23–26), the Summer School takes place at the Veranstaltungszentrum.

Veranstaltungszentrum, Level 04, Room 3

Universitätsstraße 150

44801 Bochum

The last day (June 27) takes place at Beckmanns Hof.

Beckmanns Hof

Universitätsstraße 150

44801 Bochum

Program Chairs

Review of Past Summer Schools

Summer School crypt@b-it 2024

Hands-on learning is a cornerstone of academic growth, and this was also the focus of the Summer School crypt@b-it 2024. From August 26 to 30, early-career researchers gathered at Ruhr University Bochum's "Beckmanns Hof" to explore a range of topics in cryptography.

The event featured speakers Prof. Léo Ducas (Leiden University), Anca Nitulescu (Input Output Global, Paris), and Maximilian Gebhardt (German Federal Office for Information Security, BSI). It was organized by the Gesellschaft für Informatik (GI), Fachgruppe Kryptologie (FG Krypto), CASA, and the b-it (Bonn-Aachen International Center for Information Technology), in collaboration with the International Association for Cryptologic Research (IACR).

A more detailed report can be found here.

CASA Summer School 2023 on Software Security

Creativity is always part of science, and the 2023 CASA Summer School on Software Security was no exception. The three-day event took place from August 14 to 16 at Beckmanns Hof, Ruhr University Bochum (RUB). It was chaired by the CASA PIs Prof. Kevin Borgolte, Prof. Marcel Böhme, and Prof. Alena Naiakshina.

In addition to classical talks in which international speakers gave exciting insights into their research, the program included a Capture the Flag contest, a Science Escape Game, and a Summer Pitch. These gave participants the opportunity to engage with the topic in a playful way.

Invited to Bochum were Prof. Eric Bodden (Heinz Nixdorf Institute at the University of Paderborn and Fraunhofer IEM), Prof. Helen Sharp (The Open University), Prof. Fish Wang (Arizona State University), Prof. Andreas Zeller (CISPA Helmholtz Center for Information Security), and Prof. Verena Zimmermann (ETH Zurich). In addition, Dr. Tamara Lopez (The Open University) and Fabian Schiebel (Fraunhofer Institute for Mechatronic Systems Design IEM) gave concurrent tutorials.

Diverse and engaging presentations

Helen Sharp kicked off the Summer School program with a keynote on "Secure code development in practice: the developer's point of view". The first day continued with a spotlight on the human factor in cyber security: In her keynote "Human-Centered Security: Focusing on the human in IT security and privacy research", Verena Zimmermann reflected on the psychological aspects of cyber security and their consideration in the design of usable security and privacy solutions. On the second day, the attendees gained exciting insights into "Language Based Fuzzing", which was addressed by Andreas Zeller from CISPA. Eric Bodden presented his research in his keynote on "Managing the Dependency Hell - Challenges and Current Approaches to Software Composition Analysis" and provided a deeper understanding of the subject.

"For me, the CASA Summer School was a successful event due to the diverse and engaging presentations and tutorials by excellent researchers and the active interaction of highly qualified and motivated participants," summarizes Alena Naiakshina. In her opinion, summer schools are always a good opportunity for early career researchers to meet leading experts in their respective fields and to foster interdisciplinary perspectives and the development of new ideas.

Security Exit Game for a playful approach

Generating ideas requires creativity. One example was the playful approach presented by speaker Prof. Verena Zimmermann from ETH Zurich: a card-based security exit game. The game is part of a research project that Zimmermann is working on with her student Linda Fanconi. "Various studies have shown that people find cybersecurity important, but on the other hand it has many negative associations: too complex, sometimes scary, and at the same time too boring. We developed the game to give people a positive approach to the topic," explains Zimmermann. At the CASA Summer School, participants were able to try out the prototype. They experienced not only the fun of the game but also the practical aspects of human-centered security.

Networking in a relaxed atmosphere

Besides the mix of topics such as testing, fuzzing, and developer-centered security presented by the high-ranking speakers, the Beckmanns Hof venue contributed to the participants' positive experience, says Naiakshina. Attendee Kushal Ramkumar from University College Dublin was also pleased by the variety of topics: "The CASA Summer School is very well organized and I learned a lot about topics I didn't know so well before."

Whether at the evening BBQ or during the guided tour of the adjacent botanical garden: There was plenty of opportunity to network in a relaxed atmosphere. "Events like the Summer School are very important to me to keep myself up-to-date with the latest research. Of course, you can learn a lot from literature, but in a setting like this, you can speak directly to the authors and get their thoughts on a topic. These conversations might spark interesting collaborations and also provide some new perspectives on my own research," says Kushal Ramkumar.

Copyright: CASA

Copyright: CASA, Mareen Meyer

Scientific coordination team, Copyright: CASA, Mareen Meyer

Impressions from the CASA Summer School 2023

Copyright: CASA, Mareen Meyer

Copyright: CASA, Mareen Meyer

Copyright: CASA, Mareen Meyer

Review: CASA Summer School 2022 On Blockchain Security

The CASA Summer School 2022 was held from June 20 to 23 at the Beckmanns Hof conference center at RUB. The event focused on blockchain security. About 40 participants enjoyed exciting lectures, worked together in hands-on sessions, and used the opportunity to network.

Among the speakers at the Summer School were Elli Androulaki (IBM Research), Ghassan Karame (CASA), Lucas Davi (University of Duisburg-Essen), and Clara Schneidewind (Max Planck Institute for Security and Privacy).

A detailed report on the event can be found here.

Copyright: CASA, Fabian Riediger

Impressions from the CASA Summer School 2022

![Ghassan Karame speaks at the CASA Summer School 22.](/fileadmin/_processed_/b/2/csm_Summer_School_Speaker_Karame_6570081e3c.jpg)

Ghassan Karame on Bitcoin Security. Copyright: CASA, Fabian Riediger

![The CASA Summer School 22 was a good opportunity for networking.](/fileadmin/_processed_/3/3/csm_DSC_5635klein_c4140b8d02.jpg)

Copyright: CASA, Fabian Riediger

Participants of the CASA Summer School 22. Copyright: CASA, Fabian Riediger

Contact

Johanna Luttermann

CASA Graduate School

(0)234-32-29230

Johanna.Luttermann(at)rub.de